In computing, a redundant array of independent disks, also known as a redundant array of inexpensive disks (commonly abbreviated RAID), is a system that uses multiple hard drives to share or replicate data among the drives. Depending on the version chosen, the benefit of RAID is one or more of increased data integrity, fault tolerance, throughput or capacity compared to single drives. In its original implementations (in which it was an abbreviation for "redundant array of inexpensive disks"), its key advantage was the ability to combine multiple low-cost devices using older technology into an array that offered greater capacity, reliability, speed, or a combination of these, than was affordably available in a single device using the newest technology.

At the very simplest level, RAID combines multiple hard drives into a single logical unit. Thus, instead of seeing several different hard drives, the operating system sees only one. RAID is typically used on server computers, and is usually (but not necessarily) implemented with identically-sized disk drives. With decreases in hard drive prices and wider availability of RAID options built into motherboard chipsets, RAID is also being found and offered as an option in more advanced user computers. This is especially true in computers dedicated to storage-intensive tasks, such as video and audio editing.

The original RAID specification suggested a number of prototype "RAID levels", or combinations of disks. Each had theoretical advantages and disadvantages. Over the years, different implementations of the RAID concept have appeared. Most differ substantially from the original idealized RAID levels, but the numbered names have remained. This can be confusing, since one implementation of RAID 5, for example, can differ substantially from another. RAID 3 and RAID 4 are often confused and even used interchangeably.

The very definition of RAID has been argued over the years. The use of the term redundant leads many to object to RAID 0 being called a RAID at all. Similarly, the change from inexpensive to independent confuses many as to the intended purpose of RAID. There are even some single-disk implementations of the RAID concept. For the purpose of this article, we will say that any system which employs the basic RAID concepts to combine physical disk space for purposes of reliability, capacity, or performance is a RAID system.


History

Norman Ken Ouchi at IBM was awarded U.S. Patent 4,092,732 titled "System for recovering data stored in failed memory unit" in 1978 and the claims for this patent describe what would later be termed RAID 5 with full stripe writes. This 1978 patent also mentions that disk mirroring or duplexing (what would later be termed RAID 1) and protection with dedicated parity (what would later be termed RAID 4) were prior art at that time.

In 1988, RAID levels 1 through 5 were formally defined by David A. Patterson, Garth A. Gibson and Randy H. Katz in the paper "A Case for Redundant Arrays of Inexpensive Disks (RAID)", published in the proceedings of the SIGMOD Conference 1988, pp. 109–116. The term "RAID" originated with this paper.

The work was particularly ground-breaking in that its concepts were novel yet seemed "obvious" in retrospect once they had been described. The paper spawned the entire disk array industry.

RAID implementations

Hardware vs. software

RAID can be implemented either in dedicated hardware or custom software running on standard hardware. Additionally, there are hybrid RAIDs that are partly software- and partly hardware-based solutions.

With a software implementation, the operating system manages the disks of the array through the normal drive controller (IDE/ATA, SATA, SCSI, Fibre Channel, etc.). With present CPU speeds, software RAID can be faster than hardware RAID, though at the cost of using CPU power that might be better used for other tasks. One major exception is where the hardware implementation of RAID incorporates a battery-backed write-back cache, which can speed up an application such as an OLTP database server. In this case, the hardware RAID implementation flushes the write cache to secure storage to preserve data at a known point if there is a crash. Writing to the cache is faster than accessing the disk drive; throughput is limited by RAM speed, the rate at which the cache can be mirrored to another controller, the amount of cache, and how fast the cache can be flushed to disk. For this reason, battery-backed caching disk controllers are often recommended for high-transaction-rate database servers. In the same situation, the software solution is limited to no more flushes than the number of rotations or seeks per second of the drives. Another disadvantage of a pure software RAID is that, depending on the disk that fails and the boot arrangements in use, it may not be possible to reboot the computer until the array has been rebuilt.

A hardware implementation of RAID requires at a minimum a special-purpose RAID controller. On a desktop system, this may be a PCI expansion card, or a capability built into the motherboard. In larger RAIDs, the controller and disks are usually housed in an external multi-bay enclosure. The disks may be IDE, ATA, SATA, SCSI, Fibre Channel, or any combination thereof. The controller links to the host computer(s) with one or more high-speed SCSI, Fibre Channel or iSCSI connections, either directly or through a fabric, or is accessed as network attached storage. This controller handles the management of the disks and performs parity calculations (needed for many RAID levels). This option tends to provide better performance and makes operating system support easier. Hardware implementations also typically support hot swapping, allowing failed drives to be replaced while the system is running. In rare cases hardware controllers have become faulty, which can result in data loss.

Hybrid RAIDs have become very popular with the introduction of inexpensive hardware RAID controllers. The hardware is a normal disk controller that has no RAID features, but a boot-time application allows users to set up RAIDs that are controlled via the BIOS. Modern operating systems need specialized RAID drivers to make such an array look like a single block device. Since these controllers actually do all calculations in software, not hardware, they are often called "fakeraids". Unlike software RAID, these "fakeraids" typically cannot span multiple controllers.

Both hardware and software versions may support the use of a hot spare, a preinstalled drive which is used to immediately (and almost always automatically) replace a failed drive. This reduces the mean time to repair period during which a second drive failure in the same RAID redundancy group can result in loss of data.

Some software RAID systems allow building arrays from partitions instead of whole disks. Unlike Matrix RAID they are not limited to just RAID 0 and RAID 1 and not all partitions have to be RAID.

Standard RAID levels

RAID 0

A RAID 0 (also known as a striped set) splits data evenly across two or more disks with no parity information for redundancy. RAID 0 was not one of the original RAID levels, and it is not redundant. RAID 0 is normally used to increase performance, although it can also be used as a way to create a small number of large virtual disks out of a large number of small physical ones. A RAID 0 can be created with disks of differing sizes, but the storage space added to the array by each disk is limited to the size of the smallest disk. For example, if a 120 GB disk is striped together with a 100 GB disk, the size of the array will be 200 GB.

An idealized implementation of RAID 0 would split I/O operations into equal-sized blocks and spread them evenly across two disks. RAID 0 implementations with more than two disks are also possible; however, the reliability of a given RAID 0 set is equal to the average reliability of each disk divided by the number of disks in the set. That is, reliability (as measured by mean time to failure (MTTF) or mean time between failures (MTBF)) is roughly inversely proportional to the number of members, so a set of two disks is roughly half as reliable as a single disk. The reason is that the file system is distributed across all disks: because the data is "striped" across all drives, the file system cannot cope with the loss of data and coherency when one drive fails. Data can be recovered using special tools, but it will be incomplete and most likely corrupt.
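The capacity and reliability rules described above can be sketched in a few lines of Python. The function names and the 500,000-hour MTTF figure are illustrative assumptions, and the reliability estimate assumes identical disks that fail independently.

```python
def raid0_capacity_gb(disk_sizes_gb):
    """Usable capacity: each member contributes only as much as the smallest disk."""
    return min(disk_sizes_gb) * len(disk_sizes_gb)


def raid0_mttf_hours(disk_mttf_hours, n_disks):
    """Rough MTTF of the set: single-disk MTTF divided by the number of members."""
    return disk_mttf_hours / n_disks


print(raid0_capacity_gb([120, 100]))  # 200
print(raid0_mttf_hours(500_000, 2))   # 250000.0 (the 500,000 h input is hypothetical)
```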

While the block size can technically be as small as a byte it is almost always a multiple of the hard disk sector size of 512 bytes. This lets each drive seek independently when randomly reading or writing data on the disk. If all the accessed sectors are entirely on one disk then the apparent seek time would be the same as a single disk. If the accessed sectors are spread evenly among the disks then the apparent seek time would be reduced by half for two disks, by two-thirds for three disks, etc., assuming identical disks. For normal data access patterns the apparent seek time of the array would be between these two extremes. The transfer speed of the array will be the transfer speed of all the disks added together, limited only by the speed of the RAID controller.
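As a minimal sketch of how striping lets each drive seek independently, the following maps a logical block number to a member disk and an offset on that disk. It assumes a simple round-robin layout with one block per stripe unit; `locate_block` is a hypothetical helper, not the interface of any particular controller.

```python
def locate_block(logical_block, n_disks):
    """Map a logical block number to (disk index, block offset on that disk).

    Assumes round-robin striping: consecutive blocks go to consecutive disks.
    """
    return logical_block % n_disks, logical_block // n_disks


# Consecutive logical blocks alternate between the two disks, so a long
# sequential transfer keeps both drives busy at the same time.
for block in range(6):
    disk, offset = locate_block(block, 2)
    print(f"logical block {block} -> disk {disk}, offset {offset}")
```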

RAID 0 is useful for setups such as large read-only NFS servers where mounting many disks is time-consuming or impossible and redundancy is irrelevant. Another use is where the number of disks is limited by the operating system. In Microsoft Windows, the number of drive letters for hard disk drives may be limited to 24, so RAID 0 is a popular way to use more disks. It is also a popular choice for gaming systems where performance is desired, data integrity is not very important, but cost is a consideration to most users. However, since data is shared between drives without redundancy, hard drives cannot be swapped out as all disks are dependent upon each other.

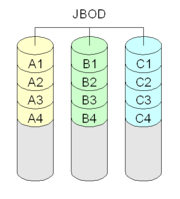

Concatenation (JBOD)

Although a concatenation of disks (also called JBOD, or "Just a Bunch of Disks") is not one of the numbered RAID levels, it is a popular method for combining multiple physical disk drives into a single virtual one. As the name implies, disks are merely concatenated together, end to beginning, so they appear to be a single large disk.

In this sense, concatenation is akin to the reverse of partitioning. Whereas partitioning takes one physical drive and creates two or more logical drives, JBOD uses two or more physical drives to create one logical drive.

In that it consists of an Array of Independent Disks (no redundancy), it can be thought of as a distant relation to RAID. JBOD is sometimes used to turn several odd-sized drives into one useful drive. Therefore, JBOD could use a 3 GB, 15 GB, 5.5 GB, and 12 GB drive to combine into a logical drive at 35.5 GB, which is often more useful than the individual drives separately.
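The end-to-beginning concatenation can be illustrated with a short sketch that resolves a position on the logical drive to a physical drive and a position within it. Positions are expressed in GB purely to match the example above; `jbod_locate` is a hypothetical name, and a real implementation would work in sectors or blocks.

```python
from bisect import bisect_right
from itertools import accumulate


def jbod_locate(logical_gb, disk_sizes_gb):
    """Return (disk index, position within that disk) for a logical position."""
    ends = list(accumulate(disk_sizes_gb))  # logical position where each disk ends
    if not 0 <= logical_gb < ends[-1]:
        raise ValueError("position outside the concatenated drive")
    disk = bisect_right(ends, logical_gb)   # first disk whose end lies beyond the position
    start = ends[disk - 1] if disk else 0
    return disk, logical_gb - start


sizes = [3, 15, 5.5, 12]
print(sum(sizes))              # 35.5
print(jbod_locate(20, sizes))  # (2, 2): 2 GB into the third drive
```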

JBOD is similar to the widely used Logical Volume Manager (LVM) and Logical Storage Manager (LSM) in UNIX and UNIX-based operating systems (OS). JBOD is useful for OSs which do not support LVM/LSM (like MS-Windows, although Windows 2003 Server, Windows XP Pro, and Windows 2000 support software JBOD, known as spanning dynamic disks). The difference between JBOD and LVM/LSM is that the address remapping between the logical address of the concatenated device and the physical address of the disk is done by the RAID hardware instead of by the OS kernel, as it is in LVM/LSM.

One advantage JBOD has over RAID 0 is in the case of drive failure. Whereas in RAID 0, failure of a single drive will usually result in the loss of all data in the array, in a JBOD array only the data on the affected drive is lost, and the data on surviving drives will remain readable. However, JBOD does not carry the performance benefits which are associated with RAID 0.

RAID 1

Traditional

RAID 1

A1 A1

A2 A2

A3 A3

A4 A4

Note: A1, A2, et cetera each represent one data block; each column represents one disk.

A RAID 1 creates an exact copy (or mirror) of a set of data on two or more disks. This is useful when read performance is more important than data capacity. Such an array can only be as big as the smallest member disk. A classic RAID 1 mirrored pair contains two disks, which increases reliability exponentially over a single disk. Since each member contains a complete copy of the data, and can be addressed independently, ordinary wear-and-tear reliability is raised by the power of the number of self-contained copies. For example, consider a model of disk drive with a weekly probability of failure of 1:500. Assuming defective drives are replaced weekly, a two-drive RAID 1 installation would carry a 1:250,000 probability of failure for a given week. That is, the chances that both drives experience an ordinary mechanical failure during the same week is the square of the chances for only one drive.
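The arithmetic in this example can be checked with exact fractions. The sketch below assumes, as the text does, that drive failures are independent and that failed drives are replaced at the end of each week; the function name is illustrative.

```python
from fractions import Fraction


def mirror_failure_probability(p_single, n_copies):
    """Probability that all copies fail in the same period, assuming independence."""
    return p_single ** n_copies


p = mirror_failure_probability(Fraction(1, 500), 2)
print(p)  # 1/250000
```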

Additionally, since all the data exists in two or more copies, each with its own hardware, the read performance goes up roughly as a linear multiple of the number of copies. That is, a RAID 1 array of three drives can be reading in three different places at the same time. To maximize performance benefits of RAID 1, independent disk controllers are recommended, one for each disk. Some refer to this practice as splitting or duplexing. When reading, both disks can be accessed independently. Like RAID 0 the average seek time is reduced by half when randomly reading but because each disk has the exact same data the requested sectors can always be split evenly between the disks and the seek time remains low. The transfer rate would also be doubled. For three disks the seek time would be a third and the transfer rate would be tripled. The only limit is how many disks can be connected to the controller and its maximum transfer speed. Many older IDE RAID 1 cards read from one disk in the pair, so their read performance is that of a single disk. Some older RAID 1 implementations would also read both disks simultaneously and compare the data to catch errors. The error detection and correction on modern disks makes this less useful in environments requiring normal commercial availability. When writing, the array performs like a single disk as all mirrors must be written with the data.

RAID 1 has many administrative advantages. For instance, in some around-the-clock (24×365) environments, it is possible to "split the mirror": declare one disk as inactive, do a backup of that disk, and then "rebuild" the mirror. This requires that the application support recovery from the image of data on the disk at the point of the mirror split. This procedure is less critical in the presence of the "snapshot" feature of some filesystems, in which some space is reserved for changes, presenting a static point-in-time view of the filesystem. Alternatively, a set of disks can be kept in much the same way as traditional backup tapes are.

Also, one common practice is to create an extra mirror of a volume (also known as a Business Continuance Volume or BCV) which is meant to be split from the source RAID set and used independently. In some implementations, these extra mirrors can be split and then incrementally re-established, instead of requiring a complete RAID set rebuild.

RAID 2

A RAID 2 stripes data at the bit (rather than block) level, and uses a Hamming code for error correction. The disks are synchronized by the controller to run in perfect tandem. This is the only original level of RAID that is not currently used. Extremely high data transfer rates are possible.

RAID 3

Traditional

RAID 3

A1 A2 A3 Ap(1-3)

A4 A5 A6 Ap(4-6)

A7 A8 A9 Ap(7-9)

B1 B2 B3 Bp(1-3)

Note: A1, B1, et cetera each represent one data byte; each column represents one disk.

A RAID 3 uses byte-level striping with a dedicated parity disk. RAID 3 is very rare in practice. One of the side effects of RAID 3 is that it generally cannot service multiple requests simultaneously. This comes about because any single block of data will by definition be spread across all members of the set and will reside in the same location, so any I/O operation requires activity on every disk.

In the example above, a request for block "A", consisting of bytes A1-A9, would require all three data disks to seek to the beginning (A1) and reply with their contents. A simultaneous request for block B would have to wait.

RAID 4

Traditional

RAID 4

A1 A2 A3 Ap

B1 B2 B3 Bp

C1 C2 C3 Cp

D1 D2 D3 Dp

Note: A1, B1, et cetera each represent one data block; each column represents one disk.

A RAID 4 uses block-level striping with a dedicated parity disk. RAID 4 looks similar to RAID 3 except that it stripes at the block, rather than the byte level. This allows each member of the set to act independently when only a single block is requested. If the disk controller allows it, a RAID 4 set can service multiple read requests simultaneously.

In the example above, a request for block "A1" would be serviced by disk 1. A simultaneous request for block B1, which resides on the same disk, would have to wait, but a request for B2 could be serviced concurrently.

RAID 5

Traditional

RAID 5

A1 A2 A3 Ap

B1 B2 Bp B3

C1 Cp C2 C3

Dp D1 D2 D3

Note: A1, B1, et cetera each represent one data block; each column represents one disk.

A RAID 5 uses block-level striping with parity data distributed across all member disks. RAID 5 has achieved popularity due to its low cost of redundancy. Generally RAID 5 is implemented with hardware support for parity calculations.

In the example above, a read request for block "A1" would be serviced by disk 1. A simultaneous read request for block B1, which resides on the same disk, would have to wait, but a read request for B2 could be serviced concurrently.
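The staggered parity placement in the RAID 5 table can be generated programmatically. The sketch below assumes the rotation shown in that table (parity starts on the last disk and moves one disk to the left with each stripe); real implementations offer several rotation schemes, and `raid5_layout` is a hypothetical helper that labels stripes with letters, so it is only meant for small illustrations.

```python
def raid5_layout(n_disks, n_stripes):
    """Return one row per stripe, with parity rotating right-to-left across disks."""
    rows = []
    for stripe in range(n_stripes):
        letter = chr(ord("A") + stripe)
        parity_disk = n_disks - 1 - (stripe % n_disks)
        row, data_index = [], 1
        for disk in range(n_disks):
            if disk == parity_disk:
                row.append(letter + "p")
            else:
                row.append(f"{letter}{data_index}")
                data_index += 1
        rows.append(row)
    return rows


# Reproduces the four-disk table: A1 A2 A3 Ap / B1 B2 Bp B3 / C1 Cp C2 C3 / Dp D1 D2 D3
for row in raid5_layout(4, 4):
    print(" ".join(row))
```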

Every time a block is written to a disk in a RAID 5, a parity block is generated within the same stripe. A block is often composed of many consecutive sectors on a disk. A series of blocks (a block from each of the disks in an array) is collectively called a "stripe". If another block, or some portion of a block, is written on that same stripe, the parity block (or some portion of the parity block) is recalculated and rewritten. For small writes, this requires reading the old data, reading the old parity, writing the new data, and writing the new parity. The disk used for the parity block is staggered from one stripe to the next, hence the term "distributed parity blocks". RAID 5 writes are expensive in terms of disk operations and traffic between the disks and the controller.
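A small write does not need to re-read the whole stripe: the new parity can be derived from the old data, the new data and the old parity alone. The following sketch illustrates this with XOR parity on short byte strings; the block contents and function names are made up for the example.

```python
def xor_bytes(*blocks):
    """XOR any number of equal-length byte strings together."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, value in enumerate(block):
            out[i] ^= value
    return bytes(out)


def small_write_parity(old_data, new_data, old_parity):
    """New parity after overwriting one data block (read-modify-write)."""
    return xor_bytes(old_parity, old_data, new_data)


a1, a2, a3 = b"\x0f\xf0", b"\x33\x55", b"\xaa\x01"  # hypothetical block contents
ap = xor_bytes(a1, a2, a3)                           # full-stripe parity

new_a2 = b"\x00\xff"
new_ap = small_write_parity(a2, new_a2, ap)

# The shortcut gives the same result as recomputing parity over the whole stripe.
print(new_ap == xor_bytes(a1, new_a2, a3))  # True
```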

The parity blocks are not read on data reads, since this would be unnecessary overhead and would diminish performance. The parity blocks are read, however, when a read of a data sector results in a cyclic redundancy check (CRC) error. In this case, the sector in the same relative position within each of the remaining data blocks in the stripe and within the parity block in the stripe are used to reconstruct the errant sector. The CRC error is thus hidden from the main computer. Likewise, should a disk fail in the array, the parity blocks from the surviving disks are combined mathematically with the data blocks from the surviving disks to reconstruct the data on the failed drive "on the fly".
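The "mathematical combination" used for reconstruction is, for single parity, a plain XOR across the surviving blocks of the stripe. A minimal sketch, with made-up block contents and a hypothetical `xor_blocks` helper:

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR a list of equal-length byte strings column by column."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


stripe = [b"RAID", b"five", b"demo"]  # three data blocks of one stripe
parity = xor_blocks(stripe)

# Suppose the disk holding the second block fails: XOR the survivors with parity.
survivors = [stripe[0], stripe[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'five'
```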

This is sometimes called Interim Data Recovery Mode. The computer knows that a disk drive has failed, but this is only so that the operating system can notify the administrator that a drive needs replacement; applications running on the computer are unaware of the failure. Reading and writing to the drive array continues seamlessly, though with some performance degradation. The difference between RAID 4 and RAID 5 is that, in interim data recovery mode, RAID 5 might be slightly faster than RAID 4, because, when the CRC and parity are in the disk that failed, the calculation does not have to be performed, while with RAID 4, if one of the data disks fails, the calculations have to be performed with each access.

In RAID 5, where there is a single parity block per stripe, the failure of a second drive results in total data loss.

The maximum number of drives in a RAID 5 redundancy group is theoretically unlimited, but it is common practice to limit the number of drives. The tradeoffs of larger redundancy groups are greater probability of a simultaneous double disk failure, the increased time to rebuild a redundancy group, and the greater probability of encountering an unrecoverable sector during RAID reconstruction. As the number of disks in a RAID 5 group increases, the MTBF can become lower than that of a single disk. This happens when the likelihood of a second disk failing out of (N-1) dependent disks, within the time it takes to detect, replace and recreate a first failed disk, becomes larger than the likelihood of a single disk failing. RAID 6 is an alternative that provides dual parity protection thus enabling larger numbers of disks per RAID group.
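The effect of group size can be explored with a commonly quoted approximation for the mean time to data loss of a single-parity group, MTTF² / (N × (N − 1) × MTTR). This is a sketch under strong assumptions (independent failures, constant failure rates, no unrecoverable read errors), and the input figures below are hypothetical.

```python
def raid5_mttdl_hours(disk_mttf_hours, n_disks, mttr_hours):
    """Approximate mean time to data loss for one single-parity group.

    Data is lost when a second disk fails while the first is being
    detected, replaced and rebuilt (the MTTR window).
    """
    return disk_mttf_hours ** 2 / (n_disks * (n_disks - 1) * mttr_hours)


disk_mttf = 500_000  # hours, hypothetical
mttr = 24            # hours to detect, replace and rebuild, hypothetical

for n in (5, 20, 200):
    mttdl = raid5_mttdl_hours(disk_mttf, n, mttr)
    verdict = "worse" if mttdl < disk_mttf else "better"
    print(f"{n:3d} disks: about {mttdl:,.0f} h, {verdict} than a single disk")
```

Under this approximation the group becomes less reliable than a single disk once N × (N − 1) × MTTR exceeds the single-disk MTTF.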

Some RAID vendors will avoid placing disks from the same manufacturing run in a redundancy group to minimize the odds of simultaneous early life and end of life failures as evidenced by the bathtub curve.

RAID 5 implementations suffer from poor performance when faced with a workload which includes many writes which are smaller than the capacity of a single stripe; this is because parity must be updated on each write, requiring read-modify-write sequences for both the data block and the parity block. More complex implementations often include non-volatile write back cache to reduce the performance impact of incremental parity updates.

In the event of a system failure while there are active writes, the parity of a stripe may become inconsistent with the data; if this is not detected and repaired before a disk or block fails, data loss may ensue as incorrect parity will be used to reconstruct the missing block in that stripe; this potential vulnerability is sometimes known as the "write hole". Battery-backed cache and other techniques are commonly used to reduce the window of vulnerability of this occurring.

RAID 6

Traditional RAID 5:

A1 A2 A3 Ap

B1 B2 Bp B3

C1 Cp C2 C3

Dp D1 D2 D3

Typical RAID 6:

A1 A2 A3 Ap Aq

B1 B2 Bp Bq B3

C1 Cp Cq C2 C3

Dp Dq D1 D2 D3

Note: A1, B1, et cetera each represent one data block; each column represents one disk; p and q represent the two Reed-Solomon syndromes.

A RAID 6 extends RAID 5 by adding an additional parity block; it thus uses block-level striping with two parity blocks distributed across all member disks. It was not one of the original RAID levels.

RAID 5 can be seen as a special case of a Reed-Solomon code where the syndrome used is the one built from generator 1 [1]. Thus RAID 5 only requires addition in the Galois field. Since we are operating on bytes, the field used is a binary Galois field $GF(2^m)$, typically with $m = 8$. In binary Galois fields, addition is computed by a simple XOR.

After understanding RAID 5 as a special case of a Reed-Solomon code, it is easy to see that it is possible to extend the approach to produce redundancy simply by producing another syndrome using a different generator; for example, 2 in $GF(2^8)$. By adding additional generators it is possible to achieve any number of redundant disks, and recover from the failure of that many drives anywhere in the array.

Like RAID 5 the parity is distributed in stripes, with the parity blocks in a different place in each stripe.
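To make the two-syndrome idea concrete, the sketch below computes P (generator 1, plain XOR) and Q (generator 2) over one byte from each data disk and then recovers two lost data bytes. The reducing polynomial 0x11d is an assumption (it is one common choice for GF(2^8)), and all function names are illustrative rather than taken from any particular implementation.

```python
def gf_mul(a, b):
    """Multiply in GF(2^8), reducing by x^8 + x^4 + x^3 + x^2 + 1 (0x11d, assumed)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return result


def gf_pow(a, n):
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a):
    """Inverse of a non-zero element: a^254, since a^255 = 1."""
    return gf_pow(a, 254)


def syndromes(data):
    """P uses generator 1 (XOR of all bytes); Q weights byte i by 2^i."""
    p = q = 0
    for i, d in enumerate(data):
        p ^= d
        q ^= gf_mul(gf_pow(2, i), d)
    return p, q


def recover_two(data, x, y, p, q):
    """Recover the bytes at positions x and y (given as None in data)."""
    p_rest, q_rest = syndromes([0 if d is None else d for d in data])
    a = p ^ p_rest                   # equals dx ^ dy
    b = q ^ q_rest                   # equals 2^x * dx ^ 2^y * dy
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    dy = gf_mul(b ^ gf_mul(gx, a), gf_inv(gx ^ gy))
    return a ^ dy, dy


data = [0x12, 0x34, 0x56, 0x78]      # one byte from each of four data disks
p, q = syndromes(data)
print(recover_two([0x12, None, 0x56, None], 1, 3, p, q) == (0x34, 0x78))  # True
```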

RAID 6 is inefficient when used with a small number of drives but as arrays become bigger and have more drives the loss in storage capacity becomes less important and the probability of two disks failing at once is bigger. RAID 6 provides protection against double disk failures and failures while a single disk is rebuilding. In the case where there is only one array it makes more sense than having a "hot spare" disk.

The user capacity of a RAID 6 array is that of n-2 drives, where n is the total number of drives in the array.

RAID 6 does not have a performance penalty for read operations, but it does have a performance penalty on write operations due to the overhead associated with the additional parity calculations.

Nested RAID Levels

Many storage controllers allow RAID levels to be nested. That is, one RAID can use another as its basic element, instead of using physical disks. It is instructive to think of these arrays as layered on top of each other, with physical disks at the bottom.

Nested RAIDs are usually signified by joining the numbers indicating the RAID levels into a single number, sometimes with a '+' in between. For example, RAID 10 (or RAID 1+0) conceptually consists of multiple level 1 arrays stored on physical disks with a level 0 array on top, striped over the level 1 arrays. RAID 0+1 is most often written with the '+' rather than as RAID 01, to avoid confusion with RAID 1. By contrast, when the top array is a RAID 0 (such as in RAID 10 and RAID 50), most vendors choose to omit the '+', probably because RAID 50 sounds fancier than the more explanatory RAID 5+0.

When nesting RAID levels, a RAID type that provides redundancy is typically combined with RAID 0 to boost performance. With these configurations it is preferable to have RAID 0 on top and the redundant array at the bottom, because fewer disks then need to be regenerated when a disk fails. (Thus, RAID 10 is preferable to RAID 0+1 but the administrative advantages of "splitting the mirror" of RAID 1 would be lost).

RAID 0+1

A RAID 0+1 (also called RAID 01, though it should not be confused with RAID 10) is a RAID used for both replicating and sharing data among disks. The difference between RAID 0+1 and RAID 1+0 is the location of each RAID system: RAID 0+1 is a mirror of stripes. Consider an example in which six 120 GB drives are set up as a RAID 0+1. Below is an example where two 360 GB level 0 arrays are mirrored, creating 360 GB of total storage space:

The maximum storage space here is 360 GB, spread across two arrays. The advantage is that when a hard drive fails in one of the level 0 arrays, the missing data can be transferred from the other array. However, adding an extra hard drive to one stripe requires you to add an additional hard drive to the other stripes to balance out storage among the arrays.

It is not as robust as RAID 10 and cannot tolerate two simultaneous disk failures unless both are in the same stripe. That is, once a single disk fails, each of the disks in the other stripe is a single point of failure. Also, once the failed disk is replaced, all the disks in the array must participate in the rebuild of its data.

To add to the confusion, some controllers that run in RAID 0+1 mode combine the striping and mirroring into a single operation. The layout of the blocks for RAID 0+1 and RAID 10 are identical except that the disks are in a different order. To the smart controller this does not matter and they gain all the benefits of RAID 10 but are still labelled as only supporting RAID 0+1 in their documentation.

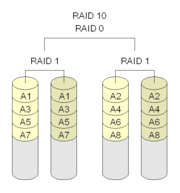

RAID 10

A RAID 10, sometimes called RAID 1+0 or RAID 1&0, is similar to a RAID 0+1 with the exception that the RAID levels used are reversed: RAID 10 is a stripe of mirrors. Below is an example where three 120 GB level 1 arrays are striped together to add up to 360 GB of total storage space:

All but one drive from each RAID 1 set could fail without damaging the data. However, if the failed drive is not replaced, the single working hard drive in the set then becomes a single point of failure for the entire array. If that single hard drive then fails, all data stored in the entire array is lost.

Extra 120GB hard drives could be added to any one of the level 1 arrays to provide extra redundancy. Unlike RAID 0+1, all the "sub-arrays" do not have to be upgraded simultaneously.

RAID 10 is often the primary choice for high-load databases, because the lack of parity to calculate gives it faster write speeds.

RAID 10 capacity: (size of smallest drive) * (number of drives, which must be even) / 2
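The capacity rule can be written as a small function. This sketch assumes two-way mirrors, as in the formula; the function name is illustrative.

```python
def raid10_capacity_gb(disk_sizes_gb):
    """Usable capacity of a RAID 10 built from two-way mirrors."""
    n = len(disk_sizes_gb)
    if n < 2 or n % 2:
        raise ValueError("RAID 10 with two-way mirrors needs an even number of drives")
    return min(disk_sizes_gb) * n / 2


print(raid10_capacity_gb([120] * 6))  # 360.0
```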

RAID 100 (RAID 10+0)

A RAID 100, sometimes also called RAID 10+0, is a stripe of RAID 10s. RAID 100 is an example of plaid RAID, a RAID in which striped RAIDs are themselves striped together. Below is an example in which four 120 GB RAID 1 arrays are striped and re-striped to add up to 480 GB of total storage space:

RAID 0

/-------------------------------------\

| |

RAID 0 RAID 0

/-----------------\ /-----------------\

| | | |

RAID 1 RAID 1 RAID 1 RAID 1

/--------\ /--------\ /--------\ /--------\

| | | | | | | |

120 GB 120 GB 120 GB 120 GB 120 GB 120 GB 120 GB 120 GB

A1 A1 A2 A2 A3 A3 A4 A4

A5 A5 A6 A6 A7 A7 A8 A8

B1 B1 B2 B2 B3 B3 B4 B4

B5 B5 B6 B6 B7 B7 B8 B8

Note: A1, B1, et cetera each represent one data sector; each column represents one disk.

All but one drive from each RAID 1 set could fail without loss of data. However, the remaining disk from the RAID 1 becomes a single point of failure for the already degraded array. Often the top level stripe is done in software. Some vendors call the top level stripe a MetaLun, or a Soft Stripe.

The major benefits of RAID 100 (and plaid RAID in general) over single-level RAID are better random read performance and the mitigation of hotspot risk on the array. For these reasons, RAID 100 is often the best choice for very large databases, where the underlying array software limits the number of physical disks allowed in each standard array. Implementing nested RAID levels allows virtually limitless spindle counts in a single logical volume.

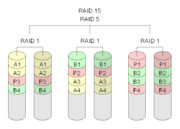

RAID 50 (RAID 5+0)

A RAID 50 combines the straight block-level striping of RAID 0 with the distributed parity of RAID 5. This is a RAID 0 array striped across RAID 5 elements.

Below is an example where three RAID 5 sets, each built from three 120 GB drives, are striped together to add up to 720 GB of total storage space:

RAID 0

/-----------------------------------------------------\

| | |

RAID 5 RAID 5 RAID 5

/-----------------\ /-----------------\ /-----------------\

| | | | | | | | |

120 GB 120 GB 120 GB 120 GB 120 GB 120 GB 120 GB 120 GB 120 GB

A1 A2 Ap A3 A4 Ap A5 A6 Ap

B1 Bp B2 B3 Bp B4 B5 Bp B6

Cp C1 C2 Cp C3 C4 Cp C5 C6

D1 D2 Dp D3 D4 Dp D5 D6 Dp

Note: A1, B1, et cetera each represent one data block; each column represents one disk; Ap, Bp, et cetera each represent parity information for each distinct RAID 5 and may represent different values across the RAID 0 (that is, Ap for A1 and A2 can differ from Ap for A3 and A4).

One drive from each of the RAID 5 sets could fail without loss of data. However, if the failed drive is not replaced, the remaining drives in that set then become a single point of failure for the entire array. If one of those drives fails, all data stored in the entire array is lost. The time spent in recovery (detecting and responding to a drive failure, and the rebuild process to the newly inserted drive) represents a period of vulnerability to the RAID set.

In the example below, datasets may be striped across both RAID sets. A dataset with 5 blocks would have 3 blocks written to the first RAID set, and the next 2 blocks written to RAID set 2.

RAID Set 1 | RAID Set 2

A1 A2 A3 Ap | A4 A5 A6 Ap

B1 B2 Bp B3 | B4 B5 Bp B6

C1 Cp C2 C3 | C4 Cp C5 C6

Dp D1 D2 D3 | Dp D4 D5 D6

Note: A1, B1, et cetera each represent one data block; each column represents one disk.

The configuration of the RAID sets will impact the overall fault tolerance. A construction of three seven-drive RAID 5 sets has higher capacity and storage efficiency, but can only tolerate three maximum potential drive failures. A construction of seven three-drive RAID 5 sets can handle as many as seven drive failures but has lower capacity and storage efficiency.
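The trade-off between the two constructions can be tabulated with a short sketch. The 120 GB drive size is borrowed from the earlier examples, and `raid50_summary` is a hypothetical helper; "max_failures" is the best case, in which every failure lands in a different RAID 5 set.

```python
def raid50_summary(n_sets, drives_per_set, drive_gb):
    """Capacity, storage efficiency and best-case fault tolerance of a RAID 50."""
    total_drives = n_sets * drives_per_set
    data_drives = n_sets * (drives_per_set - 1)  # one drive's worth of parity per set
    return {
        "capacity_gb": data_drives * drive_gb,
        "efficiency": data_drives / total_drives,
        "max_failures": n_sets,                  # at most one failure per RAID 5 set
    }


for n_sets, per_set in ((3, 7), (7, 3)):
    s = raid50_summary(n_sets, per_set, 120)
    print(f"{n_sets} sets of {per_set}: {s['capacity_gb']} GB, "
          f"{s['efficiency']:.0%} efficient, up to {s['max_failures']} failures")
```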

RAID 50 improves upon the performance of RAID 5 particularly during writes, and provides better fault tolerance than a single RAID level does. This level is recommended for applications that require high fault tolerance, capacity and random positioning performance.

As the number of drives in a RAID set and the capacity of each drive increase, the time needed to rebuild the RAID set grows, and the fault-recovery time increases correspondingly.

Proprietary RAID levels

Although all implementations of RAID differ from the idealized specification to some extent, some companies have developed entirely proprietary RAID implementations that differ substantially from the rest of the crowd.

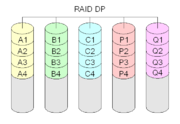

Double parity

One common addition to the existing RAID levels is double parity, sometimes implemented and known as diagonal parity [2]. As in RAID 6, two sets of parity check information are created. Unlike RAID 6, the second set is not another set of points in the overdefined polynomial which characterizes the data. Rather, double parity calculates the extra parity against a different group of blocks. For example, in our diagrams both RAID 5 and RAID 6 calculate against all A-lettered blocks to produce one or more parity blocks. However, since it is fairly easy to calculate parity against multiple groups of blocks, one can calculate parity against all A-lettered blocks and also against a permuted group of blocks.

This is more easily illustrated using RAID 4, Twin Syndrome RAID 4 (RAID 6 with a RAID 4 layout which is not actually implemented), and double parity RAID 4.

    Traditional       Twin Syndrome         Double parity
    RAID 4            RAID 4                RAID 4
    A1  A2  A3  Ap    A1  A2  A3  Ap  Aq    A1  A2  A3  Ap  1n
    B1  B2  B3  Bp    B1  B2  B3  Bp  Bq    B1  B2  B3  Bp  2n
    C1  C2  C3  Cp    C1  C2  C3  Cp  Cq    C1  C2  C3  Cp  3n
    D1  D2  D3  Dp    D1  D2  D3  Dp  Dq    D1  D2  D3  Dp  4n

Note: A1, B1, et cetera each represent one data block; each column represents one disk.

The n blocks are the double parity blocks. The block 1n would be calculated as A1 xor B2 xor C3, 2n as A2 xor B3 xor Cp, and 3n as A3 xor Bp xor C1. Because the double parity blocks are calculated across different disks in each row, it is possible to reconstruct two lost data disks through iterative recovery. For example, if disks 1 and 2 are lost, B2 can be recovered without the use of any block from those disks (any x1 or x2 block): first compute A2 = B3 xor Cp xor 2n, then recover A1 = A2 xor A3 xor Ap, and finally B2 = A1 xor C3 xor 1n.
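The recovery chain for B2 can be replayed in a few lines of code. This is a minimal sketch of the double parity RAID 4 layout shown above, limited to rows A to C and the three diagonal blocks whose formulas are given in the text. The helper names (`xor`, `build_array`, `recover_b2`) and the 8-byte block size are assumptions for illustration and do not come from any real RAID implementation.

```python
import random
from functools import reduce

BLOCK_SIZE = 8  # bytes per block; arbitrary value for the demonstration


def xor(*blocks):
    """Return the bytewise XOR of equal-length byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def build_array(seed=0):
    """Create rows A-C with data blocks 1-3, row parity p and diagonals 1n-3n."""
    rng = random.Random(seed)
    blocks = {}
    for row in "ABC":
        for col in "123":
            blocks[row + col] = bytes(rng.randrange(256) for _ in range(BLOCK_SIZE))
        # Ordinary RAID 4 row parity.
        blocks[row + "p"] = xor(blocks[row + "1"], blocks[row + "2"], blocks[row + "3"])
    # Diagonal parity blocks, using the formulas given in the text.
    diagonals = {
        "1n": xor(blocks["A1"], blocks["B2"], blocks["C3"]),
        "2n": xor(blocks["A2"], blocks["B3"], blocks["Cp"]),
        "3n": xor(blocks["A3"], blocks["Bp"], blocks["C1"]),
    }
    return blocks, diagonals


def recover_b2(surviving, diagonals):
    """Recover B2 after losing disks 1 and 2, following the steps in the text."""
    a2 = xor(surviving["B3"], surviving["Cp"], diagonals["2n"])  # via diagonal 2n
    a1 = xor(a2, surviving["A3"], surviving["Ap"])               # via row parity Ap
    return xor(a1, surviving["C3"], diagonals["1n"])             # via diagonal 1n


blocks, diagonals = build_array()
# Simulate the loss of disks 1 and 2 by dropping every x1 and x2 block.
surviving = {name: data for name, data in blocks.items() if name[1] not in "12"}
assert recover_b2(surviving, diagonals) == blocks["B2"]
print("B2 recovered without reading disk 1 or disk 2")
```

Each step uses one parity equation in which exactly one block is unknown, which is what makes the iteration possible.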

Running in degraded mode with a double parity system is not advised.

RAID 1.5

RAID 1.5 is a proprietary RAID by HighPoint and is sometimes incorrectly called RAID 15. From the limited information available it appears that it's just a correct implementation of RAID 1. When reading, the data is read from both disks simultaneously and most of the work is done in hardware instead of the driver.

RAID 7

RAID 7 is a trademark of Storage Computer Corporation. It adds caching to RAID 3 or RAID 4 to improve performance.

RAID S or Parity RAID

RAID S is EMC Corporation's proprietary striped parity RAID system used in their Symmetrix storage systems. Each volume exists on a single physical disk, and multiple volumes are arbitrarily combined for parity purposes. EMC originally referred to this capability as RAID S, and then renamed it Parity RAID for the Symmetrix DMX platform. EMC now offers standard striped RAID 5 on the Symmetrix DMX as well.

    Traditional       EMC
    RAID 5            RAID S
    A1  A2  A3  Ap    A1  B1  C1  1p
    B1  B2  Bp  B3    A2  B2  C2  2p
    C1  Cp  C2  C3    A3  B3  C3  3p
    Dp  D1  D2  D3    A4  B4  C4  4p

Note: A1, B1, et cetera each represent one data block; each column represents one disk. In the RAID S layout, A, B, et cetera are entire volumes.

Matrix RAID

Matrix RAID is a feature that first appeared in the Intel ICH6R RAID BIOS. It is not a new RAID level. Matrix RAID utilizes two physical disks. Part of each disk is assigned to a level 0 array, the other part to a level 1 array. Currently, most (possibly all) other low-cost RAID BIOS products allow a disk to participate in only one array. This product targets home users, providing a safe area (the level 1 section) for documents and other items that one wishes to store redundantly, and a faster area (the level 0 section) for the operating system, applications, etc.

Linux MD RAID 10

The Linux kernel software RAID driver (called md, for "multiple devices") can be used to build a classic RAID 1+0 array, but also has a single-level RAID 10 driver with some interesting extensions.

In particular, it supports k-way mirroring on n drives when k does not divide n. This is done by repeating each chunk k times when writing it to an underlying n-way RAID 0 array. For example, 2-way mirroring on 3 drives would look like

    A1  A1  A2
    A2  A3  A3
    A4  A4  A5
    A5  A6  A6

This is obviously equivalent to the standard RAID 10 arrangement when k does divide n.
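The placement rule described above (repeat each chunk k times, then stripe the resulting sequence across n drives) can be sketched as follows. This only illustrates the arrangement and is not the md driver's actual code; the function name `mirrored_stripe_layout` and its parameters are hypothetical.

```python
def mirrored_stripe_layout(n_drives, k_copies, n_chunks):
    """Return rows of chunk labels, one label per drive in each row."""
    if not 1 <= k_copies <= n_drives:
        # With k <= n, the k consecutive copies of a chunk always
        # land on k different drives.
        raise ValueError("need 1 <= k_copies <= n_drives")
    # Repeat every chunk k times, then cut the sequence into rows of n.
    sequence = [f"A{c}" for c in range(1, n_chunks + 1) for _ in range(k_copies)]
    return [sequence[i:i + n_drives] for i in range(0, len(sequence), n_drives)]


# 2-way mirroring on 3 drives reproduces the four rows shown above.
for row in mirrored_stripe_layout(n_drives=3, k_copies=2, n_chunks=6):
    print(" ".join(row))
```

Calling `mirrored_stripe_layout(4, 2, 4)` gives the rows `A1 A1 A2 A2` and `A3 A3 A4 A4`, which is the standard RAID 10 arrangement for the case where k divides n.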

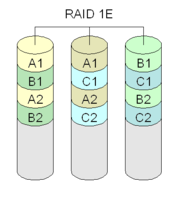

IBM ServeRAID 1E

The IBM ServeRAID adapter series supports 2-way mirroring on an arbitrary number of drives.

This configuration is tolerant of non-adjacent drives failing. Other storage systems including Sun's StorEdge T3 support this mode as well.

RAID Z

Sun's ZFS implements an integrated redundancy scheme similar to RAID 5 which it calls RAID Z. RAID Z avoids the RAID 5 "write hole" [3] and the need for read-modify-write operations for small writes by only ever performing full-stripe writes; small blocks are mirrored instead of parity protected, which is possible because the filesystem is aware of the underlying storage structure and can allocate extra space if necessary.

Reliability of RAID configurations

- Failure rate

- The mean time to failure (MTTF) of a given RAID may be lower or higher than that of its constituent hard drives, depending on what type of RAID is employed.

- Mean time to data loss (MTTDL)

- In this context, the average time before a loss of data in a given array.

- Mean time to recovery (MTTR)

- In arrays that include redundancy for reliability, this is the time following a failure to restore an array to its normal failure-tolerant mode of operation. This includes time to replace a failed disk mechanism as well as time to rebuild the array (i.e. to replicate data for redundancy).

- Unrecoverable bit error rate (UBE)

- This is the rate at which a disk drive will be unable to recover data after application of cyclic redundancy check (CRC) codes and multiple retries. This failure will present as a sector read failure. Some RAID implementations protect against this failure mode by remapping the bad sector, using the redundant data to retrieve a good copy of the data, and rewriting that good data to the newly mapped replacement sector. The UBE rate is typically specified at 1 bit in 10^15 for enterprise class disk drives (SCSI, FC, SAS), and 1 bit in 10^14 for desktop class disk drives (IDE, ATA, SATA). Increasing disk capacities and large RAID 5 redundancy groups have made it increasingly likely that a RAID group cannot be rebuilt after a disk failure, because an unrecoverable sector is found on one of the remaining disks. Double protection schemes such as RAID 6 attempt to address this issue, but suffer from a very high write penalty.

- Atomic Write Failure

- Also known as torn writes, torn pages, incomplete writes or interrupted writes. This is a little-understood and rarely mentioned failure mode for redundant storage systems. Database researcher Jim Gray wrote "Update in Place is a Poison Apple" during the early days of relational database commercialization. However, this warning largely went unheeded and fell by the wayside upon the advent of RAID, which many software engineers mistook as solving all data storage integrity and reliability problems. Many software programs update a storage object "in-place"; that is, they write a new version of the object on to the same disk addresses as the old version of the object. While the software may also log some delta information elsewhere, it expects the storage to present "atomic write semantics", meaning that the write of the data either occurred in its entirety or did not occur at all. However, very few storage systems provide support for atomic writes, and even fewer specify their rate of failure in providing this semantic. Note that while writing an object, a RAID storage device will usually be writing all redundant copies of the object in parallel. Hence an error that occurs during the write may leave the redundant copies in different states, and may furthermore leave the copies in neither the old nor the new state. This is a problem because delta logging relies on the original data being in either the old or the new state in order to back out the logical change.
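The unrecoverable bit error rates quoted above can be turned into a rough estimate of how often a RAID 5 rebuild fails. The sketch below rests on simplifying assumptions that are not from the source: bit errors are independent, and every bit on every surviving drive must be read during the rebuild. The function name and the example figures (six surviving 500 GB drives) are hypothetical.

```python
import math


def rebuild_failure_probability(surviving_drives, drive_bytes, ube_rate):
    """Estimate the chance of at least one unrecoverable bit error in a rebuild.

    Assumes independent bit errors and a full read of every surviving drive.
    """
    bits_read = surviving_drives * drive_bytes * 8
    # 1 - (1 - p)^n, written with log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(bits_read * math.log1p(-ube_rate))


# Hypothetical seven-drive RAID 5 set of 500 GB drives with one drive failed.
for label, rate in [("desktop, 1 in 10^14", 1e-14), ("enterprise, 1 in 10^15", 1e-15)]:
    p = rebuild_failure_probability(6, 500 * 10**9, rate)
    print(f"{label}: {p:.1%} chance of hitting an unrecoverable sector")
```

Under these assumptions the risk grows with both the drive capacity and the number of drives in the redundancy group, which is the trend described above.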
